In January, TABPI conducted a survey of B2B (trade publication) editors from across the world, asking how artificial intelligence products, such as ChatGPT, are being used in editorial and design work, whether they are effective, and what the future may hold for the industry. In Part 1 of this report, we looked at some of the uses of AI, the impacts and challenges faced by editorial and design staffs, ethical concerns, and future use. Here, we’ll finish up with some insights into privacy and performance, recommendations for better use of AI, and what this means for B2B journalism between now and the end of this decade.

Use, privacy, and performance

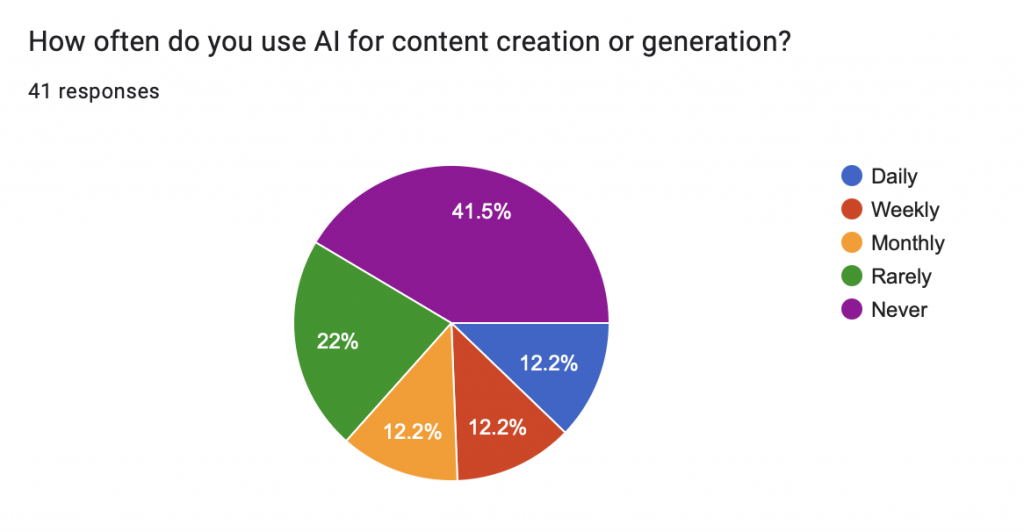

Although 61% of respondents said that they use some sort of AI technology or tools in their editorial and/or design processes, the frequency of use varies. When asked how often they use AI for content creation or generation, 12% reported that they use it daily, 12% weekly, 12% monthly, and 22% rarely.

And while some reported that they use AI to track and analyze performance metrics of editorial content, the results haven’t been too promising thus far. A mere 5% reported that it had significantly improved their ability to do so, with 8% saying it had moderately improved it, and 5% saying only slightly. That means roughly 82% said it had not improved this task at all.

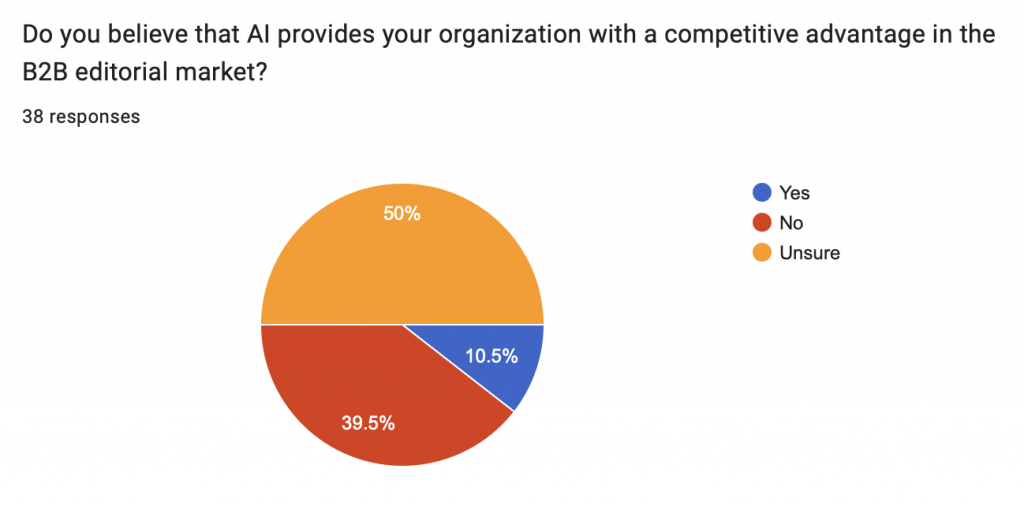

Also surprising was that only 11% of editors said that they believed that AI use provided their organization with a competitive advantage; 40% said it did not, with roughly half of respondents being unsure.

As noted in Part 1 of this report, being this early in a new technology paradigm means that there aren’t a lot of corporate structures in place for dealing with the use of AI. On a practical level, this means that there aren’t many standard ways for organizations to collect feedback from editors and designers and adapt AI tools and their use where necessary. We asked how any such feedback is currently working.

Editors told us that this process is incredibly informal, if a process is in place at all. Much of the feedback occurs during corporate meetings, through one-on-one correspondence, or in working groups. And platforms seem to be chosen on an ad hoc basis most of the time. Thirty-eight percent reported that these choices are made based on recommendations from others or via staff trial and evaluation. Things like corporate cost considerations were mentioned by only a few of the respondents.

Almost half of the respondents said they were neutral when asked if they were satisfied with the performance of the AI tools that they currently use. Twenty-nine percent reported being satisfied and 12% very satisfied, while another 12% reported being dissatisfied.

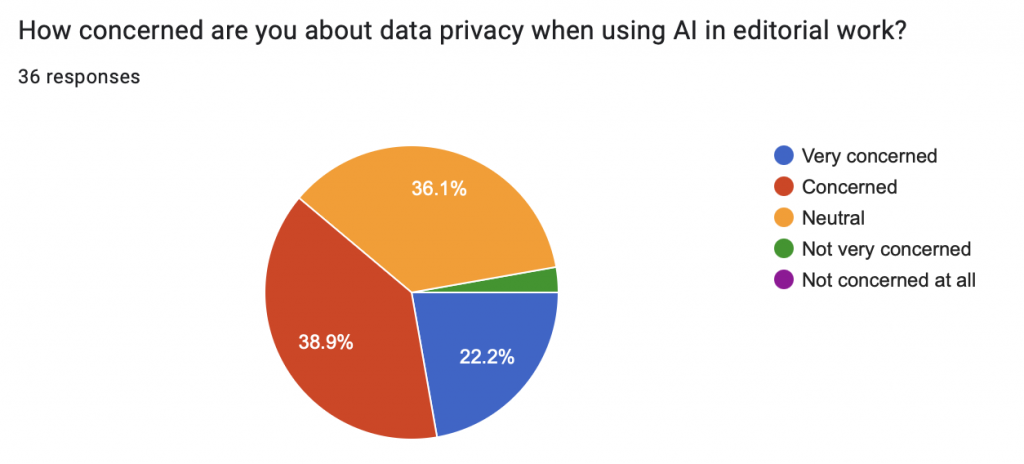

Many editors are uneasy about feeding a program like ChatGPT an article they have written so that it can suggest improvements: does that original content now become part of the wider AI source material that has been scraped from numerous sources around the globe? We found that 36% reported that they were neutral when asked about data privacy concerns when using AI for editorial work. Roughly 39% said they were concerned and another 22% said they were very concerned. A small percentage (3%) reported being not very concerned.

Recommendations for staffs

AI’s most valuable benefits appear to be brainstorming ideas (via generative tech) and grammar checking. Respondents said that it must not be trusted for anything approaching final results, and that its use should instead be kept very targeted.

“For us, automation of the processes is where we will see the most benefit. Most of our brainstorming sessions are without AI, but we do recognize its potential in this regard. So, automating the process for editorial calendars in relation to trends will help,” said one respondent.

“Be very specific about what functions the technology needs to perform otherwise it may not produce the most desirable results,” said another editor.

B2B and AI in 2029

Some editors thought AI technology would have a bright future in the coming years. One noted that advances in technology should lead to greater use of AI in fact checking, copy editing, and generative tasks such as headline writing, article summaries, and even AI-created art for magazine covers or infographics.

“I think editors will need to reimagine their role,” said another respondent. “To me, the biggest ethical consideration relates to the expectation that AI tools will cause substantial disruption to the editorial field in the coming years and the content landscape. While there are potential ways to misuse AI tools to either do superfluous research or to pass off largely AI-written content as one’s own, a much bigger question to me relates [to] establishing a cohesive strategy on how to best integrate human critical thinking, research, interviewing skills with AI tools to help parse data sets, help with fact checking (with internet-connected tools to help with research), etc. As the tools evolve, they will be able to replace more human skills, shifting humans’ work. The chief ethical consideration here is to ensure that the company as a whole is carefully thinking and exploring a variety of tools to get a sense of how they will impact editors’ lives in the coming years.”

Another said they can see it being used to better predict which topics and types of content resonate with and interest a publication’s readership, and therefore to serve as one tool of many to enhance the production of high-quality, human-driven editorial work.

“I think publications that have lost editors or writers to AI will regret that decision and editors will be reinstated to do what they do best. AI cannot replace editors or writers.”

[Full transparency: TABPI used ChatGPT to develop a first draft of the survey questions, which were extensively modified by a human editor before being finalized.]